
Data and machine learning in financial fraud prevention

Sep 4, 2025

Applying advanced analytics and data science to financial fraud detection using machine learning

In a speech addressing the American Bar Association’s 39th National Institute on White Collar Crime, Deputy Attorney General Lisa Monaco warned that artificial intelligence (AI) “holds great promise to improve our lives — but great peril when criminals use it to supercharge their illegal activities, including corporate crime.”

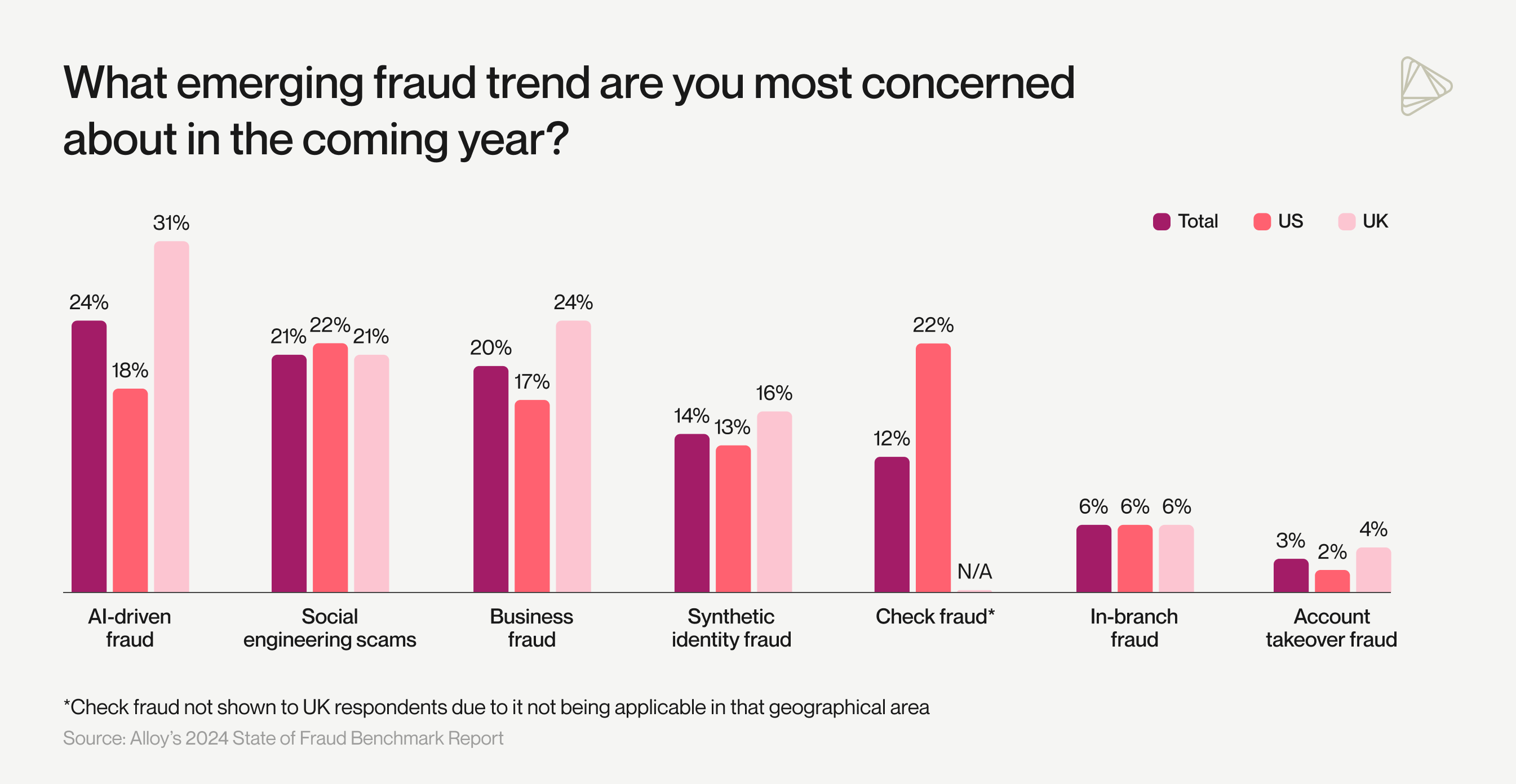

That “peril” is already being felt across financial services. According to Alloy’s 2024 State of Fraud Benchmark Report, nearly one in four financial institutions and fintechs identified AI-powered fraud as their most pressing concern that year. A year later, in the 2025 edition of our annual fraud report, 60% of institutions and fintechs experienced an uptick in fraud attempts targeting both consumer and business accounts, validating many organizations’ real-world concerns.

As fraudsters continue to execute more AI-enabled fraud attacks, the financial services industry is under pressure to adopt smarter, more adaptive machine learning-based fraud detection strategies. To accomplish this, financial organizations are fighting tech with tech, turning to risk management strategies that feature advanced machine learning (ML) and actionable AI.

This blog post explores how data and machine learning work together to form the foundation of AI fraud detection. We’ll share data scientist-approved strategies for improving fraud detection through ML and AI, from building wide datasets to labeling data, ensuring interpretability, and handling class imbalances when training ML models.

Why is machine learning so important to modern fraud detection?

While AI is often used as a catch-all term, it’s machine learning — a subset of AI — that forms the foundation of scalable, data-driven fraud detection and prevention, especially in supervised learning applications where fraud outcomes can be labeled and learned.

As banks, fintechs, and credit unions become more effective at stopping fraudsters, they also raise the cost of each attack, forcing criminals to invest more time, tools, and coordination. To stay ahead, financial institutions are increasingly adopting predictive fraud models powered by machine learning algorithms.

ML can help with fraud detection by automating data analysis, extracting patterns, and performing anomaly detection on large datasets — a task that traditional fraud detection methods (like rule-based systems) often struggle with. These machine learning techniques are most effective when paired with complementary tactics, such as biometrics, behavioral analytics, strong authentication, and, most importantly, robust datasets that capture real-world risk signals and suspicious activity patterns.

What makes machine learning effective in fraud detection?

Here’s how financial institutions can set up their machine learning systems for real impact:

A wide dataset

One of the best ways to improve your machine learning models is to give them access to a wide range of fraud signals. The more varied your data sources, the more context your models have to spot patterns and flag unusual behavior accurately.

Identity data is at the heart of fraud detection. Training your models on both traditional data (like credit bureau and government ID information) and alternative data (such as payroll, utility, and device data) helps create more expansive, high-signal datasets. In turn, you improve your ability to verify identities and detect sophisticated fraud tactics.

Broadening your dataset also allows ML models to generalize better, reducing overfitting and improving accuracy across diverse populations. During model training, having varied data sources helps prevent bias toward specific fraud patterns and improves the detection of novel attack vectors. But with more data comes more complexity, making it essential to implement orchestration strategies that standardize, prioritize, and sequence inputs effectively.

As financial organizations expand their data inputs, strict data privacy protocols are necessary to protect sensitive information and stay aligned with regulatory compliance standards.

Data orchestration

A wide dataset alone isn’t enough. Too much data can overwhelm your models or lead to rigid decisioning logic, meaning financial institutions and fintechs must also apply that data strategically.

That’s where data orchestration comes in.

Data orchestrators help sequence and combine internal and third-party data points in real time, providing a clearer, unified view of risk. They allow your system to dynamically pull in data sources rather than applying a one-size-fits-all rule. These tools enable non-linear workflows where data can be accessed at the right moment, supporting diverse use cases — from real-time credit card fraud detection to payment fraud and account takeover prevention.

For example, if a credit score comes back low, an orchestrated approach might defer that judgment until non-credit data like income or apartment rental information is evaluated. This prevents good applicants from being prematurely rejected based on a single low signal.
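The deferral logic above can be sketched as a small decision function. This is a minimal illustration, not Alloy's orchestration API: the `Applicant` type, the fetcher callables, and every threshold are hypothetical. The point is that the non-credit data calls only run when the credit signal alone is inconclusive.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Applicant:
    credit_score: int

# Hypothetical threshold, for illustration only.
AUTO_APPROVE_SCORE = 680

def decide(applicant: Applicant,
           fetch_income: Callable[[Applicant], float],
           fetch_rent_months: Callable[[Applicant], int]) -> str:
    """Return 'approve', 'review', or 'decline' for one application."""
    if applicant.credit_score >= AUTO_APPROVE_SCORE:
        return "approve"  # strong credit signal: no extra data calls needed
    # Low score: defer judgment and pull non-credit data before deciding.
    income = fetch_income(applicant)
    rent_months = fetch_rent_months(applicant)  # months of on-time rent payments
    if income >= 50_000 and rent_months >= 12:
        return "approve"
    if income >= 30_000 or rent_months >= 6:
        return "review"  # mixed signals: route to a manual reviewer
    return "decline"

# Stub data sources standing in for third-party calls.
calls = []

def stub_income(a: Applicant) -> float:
    calls.append("income")
    return 62_000.0

def stub_rent(a: Applicant) -> int:
    calls.append("rent")
    return 24

print(decide(Applicant(credit_score=720), stub_income, stub_rent))  # approve, no calls made
print(decide(Applicant(credit_score=590), stub_income, stub_rent))  # approve after deferral
print(calls)  # ['income', 'rent']
```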

Orchestration also supports faster, more consistent decisioning through intelligent automation, which feeds into ML effectiveness. Because orchestrated systems standardize and structure the inputs flowing into your models, they improve the quality and consistency of training data. That makes models easier to tune, less prone to noise, and better at generalizing across edge cases.

Structured labels and outcomes

In fraud detection, labeled data tells the ML model whether a financial transaction, identity, or application was ultimately deemed fraudulent or legitimate. High-quality, consistent labeling is essential for teaching models to distinguish risk accurately and avoid bias. Unsupervised learning can also play a role by detecting fraud based on outliers and anomalies without requiring labeled outcomes.
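As a minimal sketch of label-free outlier detection, the function below scores one customer's transaction amounts with a modified z-score (median and median absolute deviation) and the commonly cited 3.5 cutoff. It is a deliberately simple stand-in, not a full unsupervised model such as an isolation forest, and the sample amounts are made up.

```python
from statistics import median

def robust_outliers(amounts: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of amounts whose modified z-score exceeds `threshold`.

    The median and MAD are barely moved by a few extreme values, so outliers
    cannot hide by inflating the scale. No fraud labels are used.
    """
    med = median(amounts)
    mad = median(abs(x - med) for x in amounts)
    if mad == 0:
        return []  # no spread to measure against; a real system needs a fallback here
    # 0.6745 rescales MAD so scores are comparable to standard z-scores for normal data.
    return [i for i, x in enumerate(amounts)
            if abs(0.6745 * (x - med) / mad) > threshold]

# Hypothetical transaction history for one customer.
amounts = [42.0, 38.5, 45.0, 40.0, 39.9, 41.2, 2500.0]
print(robust_outliers(amounts))  # [6]
```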

Fraud isn’t binary, and your outcomes shouldn’t be either. Structuring labels hierarchically (e.g., fraud → identity fraud → synthetic identity) gives models more nuance to learn from and enables more sophisticated predictions. Similarly, assigning risk severity levels (like low, medium, or high) helps models differentiate edge cases from urgent threats.

Well-structured outcomes also support downstream fraud operations. They make it easier for analysts to interpret predictions, for systems to escalate high-risk cases, and for models to retrain as fraud patterns evolve. In other words, labels don’t just teach the model — they create a feedback loop that keeps your fraud strategy responsive and up to date.

Optimized rulesets

Overly stringent fraud controls can frustrate legitimate customers and lead them to abandon their applications. At the same time, lax controls open the door to fraudulent activity, eroding customer trust and causing financial losses. The key is to use AI and ML to assess risk intelligently and in real time — from the moment a customer begins onboarding through every stage of their lifecycle.

While traditional methods like static rulesets may catch known fraud patterns, they often lack the flexibility and adaptability that modern ML models offer in identifying new fraud threats. That adaptability enables a seamless customer experience for legitimate users while proactively stopping fraudulent identities from engaging in malicious activity.

Financial institutions and fintechs can leverage ML and fraud data to supplement traditional rule-based systems. Why is this so important? Fine-tuning your rulesets helps maximize legitimate application approvals while minimizing fraud losses, false positives, and manual reviews. The result is stronger fraud defenses and greater operational efficiency.
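One way ML scores feed a tuned ruleset is by choosing the decision threshold that minimizes expected cost on labeled historical data. The sketch below assumes a hypothetical average loss per missed fraud (`fraud_loss`) and a hypothetical friction cost per falsely flagged good customer (`friction_cost`); a real program would also weigh manual-review capacity. Scores at or above the threshold are flagged.

```python
def expected_cost(scores, labels, threshold, fraud_loss, friction_cost):
    """Total cost of flagging every score >= threshold (label 1 = fraud)."""
    missed_fraud = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    false_alarms = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return missed_fraud * fraud_loss + false_alarms * friction_cost

def best_threshold(scores, labels, fraud_loss, friction_cost):
    """Try each observed score as a cut-off (plus 'flag nothing') and keep the cheapest."""
    candidates = sorted(set(scores)) + [float("inf")]
    return min(candidates,
               key=lambda t: expected_cost(scores, labels, t, fraud_loss, friction_cost))

# Hypothetical validation scores and confirmed outcomes.
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.60, 0.70, 0.90]
labels = [0, 0, 0, 0, 1, 0, 1, 1]

print(best_threshold(scores, labels, fraud_loss=500, friction_cost=20))    # 0.4
print(best_threshold(scores, labels, fraud_loss=500, friction_cost=1000))  # 0.7
```

Raising the friction cost pushes the cut-off up, trading some missed fraud for fewer good customers turned away.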

Requirements for applying machine learning in financial fraud detection

When optimizing rulesets with machine learning for fraud detection, it helps to understand training data, ensure interpretability, and always remain skeptical of class imbalances.

Understanding training data involves familiarizing yourself with different data types, processes, and hierarchies. Ensuring interpretability is crucial for building confidence in your ML models. Finally, being aware of class imbalances can help you avoid misleading results that could undermine your fraud detection efforts.

Let's take a closer look at each of these requirements:

1. Understand the training data

Understanding training data starts with knowing data formats, processes, and hierarchies. These fundamentals help ensure your data is properly prepared, your models are well-designed, and your outputs are reliable and interpretable.

Data formats

Data format refers to how information is structured and organized for machine learning. Common types include structured data (like tabular fields), unstructured data (like text, images, or audio), and semi-structured formats (such as JSON or XML). Knowing the strengths and limitations of each format is essential for selecting the right model and preprocessing techniques, because different ML algorithms are suited to different data formats.

For example, traditional ML methods like logistic regression or decision trees typically use structured, tabular data. In fraud detection, this might include features like transaction amount, location, time, or customer demographics, with a label indicating whether each transaction is legitimate or fraudulent.

Unstructured data, such as images or text, often calls for deep learning models such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs). In these cases, the raw data (pixel values, word embeddings, and so on) becomes the model input, and the model learns much of its own feature representation instead of relying on hand-engineered features.

Fraud detection commonly relies on structured data like transaction history and customer profiles. But incorporating unstructured sources — such as customer behavior or social activity data — can offer additional context and improve detection of more complex fraud. The key is converting raw data into meaningful numerical representations that models can interpret.
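A minimal sketch of turning raw records into numeric model inputs, assuming hypothetical field names (`amount`, `timestamp`, `country`, `is_fraud`) and a tiny fixed country vocabulary: amounts are log-scaled, the hour is extracted from the timestamp, and the country is one-hot encoded with an "other" slot for unseen values.

```python
import math
from datetime import datetime

COUNTRIES = ["US", "CA", "GB"]  # hypothetical vocabulary; anything else maps to "other"

def encode(txn: dict) -> list[float]:
    """Map one raw transaction record to a fixed-length numeric feature vector."""
    ts = datetime.fromisoformat(txn["timestamp"])
    one_hot = [1.0 if txn["country"] == c else 0.0 for c in COUNTRIES]
    other = 0.0 if txn["country"] in COUNTRIES else 1.0
    return [
        math.log1p(txn["amount"]),  # compresses heavy-tailed amounts
        float(ts.hour),             # time of day as a number from 0 to 23
        *one_hot,
        other,
    ]

raw = [
    {"amount": 25.0, "timestamp": "2025-03-01T14:05:00", "country": "US", "is_fraud": 0},
    {"amount": 980.0, "timestamp": "2025-03-02T03:41:00", "country": "BR", "is_fraud": 1},
]
X = [encode(t) for t in raw]      # features
y = [t["is_fraud"] for t in raw]  # labels, kept separate from the features
print(X[1][1:], y)  # [3.0, 0.0, 0.0, 0.0, 1.0] [0, 1]
```

The hour is kept as a plain number for brevity; a cyclic (sine/cosine) encoding is a common refinement so that 23:00 and 00:00 end up close together.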

Today, some fraud detection teams are also exploring natural language processing (NLP) to extract meaning from unstructured data sources like emails, support chats, or application notes. This is helping models flag inconsistencies or deceptive language patterns that could signal fraud.

Data processes

Fraud-focused ML models rely on clean, well-managed data pipelines. These typically include processes like data preparation, integration, orchestration, splitting, and monitoring.

Most of the time in an ML workflow goes into cleaning and analyzing data, and for good reason: cutting corners at this stage undermines model performance. Once initial preparation is complete, orchestration tools help bring structure to data from multiple sources and ensure consistent delivery to the model.

With robust data processes in place, fraud prevention teams can ensure their models are trained on timely, accurate data, which improves detection performance and adaptability over time.
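Splitting is one of the processes listed above where fraud data needs extra care. A minimal sketch of one common choice, a chronological split, assuming records carry hypothetical `event_date` and `is_fraud` fields: the model trains on older events and is evaluated on newer ones, mirroring how it will score future traffic. Because fraud labels can arrive late (chargebacks, for example, may surface weeks after a transaction), the optional `gap_days` parameter, also an assumption, leaves recent, possibly under-labeled events out of training.

```python
from datetime import date, timedelta

def time_split(records, cutoff, gap_days=0):
    """Train on events before (cutoff - gap_days); test on events on or after cutoff.

    Events inside the gap are left out of both sets.
    """
    train_end = cutoff - timedelta(days=gap_days)
    train = [r for r in records if r["event_date"] < train_end]
    test = [r for r in records if r["event_date"] >= cutoff]
    return train, test

def fraud_rate(records):
    return sum(r["is_fraud"] for r in records) / len(records) if records else 0.0

# Hypothetical labeled events.
records = [
    {"event_date": date(2025, 1, 5), "is_fraud": 0},
    {"event_date": date(2025, 1, 20), "is_fraud": 1},
    {"event_date": date(2025, 2, 3), "is_fraud": 0},
    {"event_date": date(2025, 2, 17), "is_fraud": 0},
    {"event_date": date(2025, 3, 2), "is_fraud": 1},
    {"event_date": date(2025, 3, 9), "is_fraud": 0},
]
train, test = time_split(records, cutoff=date(2025, 3, 1), gap_days=14)
print(len(train), len(test), fraud_rate(train), fraud_rate(test))  # 3 2 0.3333333333333333 0.5
```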

Hierarchy of labels and outcomes

In supervised machine learning, labels are the outcomes the model is trained to predict using input data. In the context of fraud detection, this usually means determining whether a transaction, application, or account is fraudulent or legitimate.

The results of past fraud investigations are used to generate these labels, and they’re often structured in a hierarchy. For example, if an entity is confirmed to be fraudulent, that case might be categorized more specifically by type of fraud, including identity theft, account takeover, credit card fraud, or money laundering. These outcomes can also be tagged with a risk level — like high, medium, or low — to indicate the severity of the fraud.
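A minimal sketch of how one investigated case could be stored with this hierarchy and flattened into targets at several levels of detail (fraud vs. legitimate, fraud type, severity). The class, field names, type strings, and the 0 to 3 severity scale are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

RISK_ORDER = {"low": 1, "medium": 2, "high": 3}

@dataclass(frozen=True)
class FraudOutcome:
    is_fraud: bool
    fraud_type: Optional[str] = None  # e.g. "identity_theft", "account_takeover"
    risk_level: Optional[str] = None  # "low", "medium", or "high"

    def __post_init__(self):
        # Keep the hierarchy consistent: only fraud cases carry a type or severity.
        if not self.is_fraud and (self.fraud_type or self.risk_level):
            raise ValueError("legitimate outcomes cannot have a fraud type or risk level")
        if self.risk_level is not None and self.risk_level not in RISK_ORDER:
            raise ValueError(f"unknown risk level: {self.risk_level!r}")

def training_targets(outcome: FraudOutcome) -> dict:
    """Flatten one outcome into coarse-to-fine targets a model can learn from."""
    return {
        "binary": int(outcome.is_fraud),
        "type": outcome.fraud_type or ("unknown_fraud" if outcome.is_fraud else "legitimate"),
        "severity": RISK_ORDER.get(outcome.risk_level, 0),
    }

print(training_targets(FraudOutcome(True, "account_takeover", "high")))
# {'binary': 1, 'type': 'account_takeover', 'severity': 3}
print(training_targets(FraudOutcome(False)))
# {'binary': 0, 'type': 'legitimate', 'severity': 0}
```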


Deep learning models are especially effective at identifying these kinds of complex patterns because they can automatically learn from hierarchical data structures. With a large enough dataset that includes these nuanced outcomes, deep learning can spot layered relationships and evolving patterns over time.

Understanding how labels and outcomes relate, and how they’re structured, helps fraud analysts make more informed decisions and prioritize their investigations effectively. It also supports the creation of more informative inputs for machine learning models, like aggregated risk scores, which improve accuracy over time. The more historical data patterns a model can learn from, the more adaptable it becomes to emerging fraud tactics.

2. Ensure interpretability

Interpretability in machine learning refers to the ability to understand and explain how a model makes its predictions or decisions. It involves gaining insight into the model’s internal logic, feature importance, and the reasoning behind its outcomes.

Deep learning models can achieve high accuracy, but they’re often harder to interpret, making it challenging to understand how they arrived at a particular prediction.

Source: Cambridge

In fraud risk management, simpler ML approaches, like gradient-boosted classification trees or logistic regression, can outperform deep neural networks on certain tasks while being much easier to interpret. These models provide clear insights into which features are most important for making predictions and how changes in input values affect the output. Their parameters, such as logistic regression coefficients or tree split thresholds, can be tied directly to existing rulesets, creating actionable opportunities for refinement.

For example, if a logistic regression model identifies certain transaction characteristics as solid indicators of fraud, these insights can be used to refine the rules in a fraud detection system. This kind of model-driven feedback loop helps ensure that systems remain both accurate and adaptable.
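To make this feedback loop concrete, here is a minimal sketch that fits a logistic regression with plain NumPy gradient descent on synthetic data and reads the coefficients as odds multipliers. The features (`new_device`, `amount_z`, `noise`) and the data-generating weights are invented for illustration; in practice you would likely use a library implementation such as scikit-learn's `LogisticRegression`. Comparing coefficient sizes is only meaningful when features are on comparable scales.

```python
import numpy as np

def fit_logistic(X, y, lr=1.0, epochs=3000):
    """Unregularized logistic regression via batch gradient descent on mean log loss."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])  # prepend an intercept column
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(Xb @ w)))  # predicted fraud probability
        w -= lr * Xb.T @ (p - y) / len(y)     # gradient of the mean log loss
    return w

# Synthetic data: fraud odds rise with a new device and larger amounts; `noise` is unrelated.
rng = np.random.default_rng(0)
n = 5000
new_device = rng.integers(0, 2, n).astype(float)
amount_z = rng.normal(0.0, 1.0, n)
noise = rng.normal(0.0, 1.0, n)
true_logit = -3.0 + 2.0 * new_device + 0.8 * amount_z
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-true_logit))).astype(float)

X = np.column_stack([new_device, amount_z, noise])
w = fit_logistic(X, y)
for name, coef in zip(["intercept", "new_device", "amount_z", "noise"], w):
    print(f"{name:>10}: {coef:+.2f}  (odds multiplier {np.exp(coef):.2f})")
```

A large positive `new_device` coefficient might prompt a review of whether a new-device verification rule is set at the right amount threshold, while a near-zero coefficient suggests a feature that may not deserve a rule at all.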

3. Be skeptical of class imbalances

When a machine learning model delivers promising metrics, it’s tempting to share the results right away. But relying on accuracy alone can be misleading — especially when there’s a class imbalance problem.

Class imbalance occurs when one class (e.g., fraudulent transactions) is vastly outnumbered by another (e.g., legitimate transactions). This can pose challenges when training ML models that need to learn the boundaries or patterns that distinguish "fraud" from "not fraud."

Consider, for example, a dataset of 100,000 bank accounts where only 100 are fraudulent. A model that classifies all accounts as legitimate would achieve an accuracy of 99.9% without detecting any fraud. To prevent fraud effectively, models must be evaluated on how well they detect the minority class, not just how often they’re technically “right.”

Source: ResearchGate
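The arithmetic in the 100,000-account example can be checked in a few lines: a "model" that labels every account legitimate scores 99.9% accuracy yet catches none of the 100 fraudulent accounts.

```python
n_accounts, n_fraud = 100_000, 100
y_true = [1] * n_fraud + [0] * (n_accounts - n_fraud)  # 1 = fraud
y_pred = [0] * n_accounts  # "model" that calls every account legitimate

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n_accounts
caught = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
recall = caught / n_fraud
print(f"accuracy = {accuracy:.1%}, recall = {recall:.0%}")  # accuracy = 99.9%, recall = 0%
```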

Fraud typically represents only a small fraction of total transactions or applications, which makes class imbalance a common challenge in fraud detection. Here are several ways to address class imbalances effectively:

Apply resampling techniques

Resample the minority class by creating synthetic examples of fraudulent transactions to balance the dataset. Resampling techniques like Synthetic Minority Over-sampling Technique (SMOTE) can generate new examples based on the existing minority class instances.

Downsampling the majority class (in other words, randomly removing legitimate transactions from the majority class) can also balance the dataset, but may lead to loss of information.
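A minimal NumPy sketch of both ideas on invented data. `smote` follows SMOTE's core step (interpolate between a minority example and one of its k nearest minority neighbours) but omits refinements found in library versions; `random_undersample` drops majority rows at random. Either should be applied to the training split only, so evaluation still reflects the real class balance. For production work, imbalanced-learn's `SMOTE` and `RandomUnderSampler` are tested implementations.

```python
import numpy as np

def smote(X_min, n_new, k=5, rng=None):
    """Create n_new synthetic minority rows by SMOTE-style interpolation."""
    if len(X_min) < 2:
        raise ValueError("need at least two minority examples")
    rng = np.random.default_rng() if rng is None else rng
    # Pairwise Euclidean distances between minority rows only.
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)  # a row is not its own neighbour
    k = min(k, len(X_min) - 1)
    neighbours = np.argsort(d, axis=1)[:, :k]          # k nearest minority neighbours per row
    base = rng.integers(0, len(X_min), n_new)          # random starting rows
    nbr = neighbours[base, rng.integers(0, k, n_new)]  # one random neighbour each
    gap = rng.random((n_new, 1))                       # position along the segment, in [0, 1)
    return X_min[base] + gap * (X_min[nbr] - X_min[base])

def random_undersample(X_maj, n_keep, rng=None):
    """Keep n_keep randomly chosen majority rows; the rest are discarded."""
    rng = np.random.default_rng() if rng is None else rng
    return X_maj[rng.choice(len(X_maj), size=n_keep, replace=False)]

# Hypothetical training split: 1,000 legitimate rows, 20 fraudulent rows, 2 features.
rng = np.random.default_rng(42)
X_legit = rng.normal(0.0, 1.0, size=(1000, 2))
X_fraud = rng.normal(3.0, 0.5, size=(20, 2))

X_fraud_aug = np.vstack([X_fraud, smote(X_fraud, n_new=180, k=5, rng=rng)])
X_legit_small = random_undersample(X_legit, n_keep=200, rng=rng)
print(X_fraud_aug.shape, X_legit_small.shape)  # (200, 2) (200, 2)
```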

Adjust class weights

Instead of changing the data itself, you can shift how the model learns from it. Many algorithms, including Random Forest and XGBoost, allow you to assign greater importance to the minority class. This helps models pay closer attention to fraud examples and penalize misclassifications more heavily during training.
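A minimal sketch of the "balanced" weighting heuristic, w_c = n_samples / (n_classes * n_c), which is the formula scikit-learn applies for `class_weight="balanced"`; XGBoost exposes a related knob, `scale_pos_weight`, for binary problems. The label counts are hypothetical, and the weighted loss shows a missed fraud case being penalized far more than a false alarm made with the same confidence.

```python
import math
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * count_of_that_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

def weighted_log_loss(y, p_fraud, weights, eps=1e-15):
    """Binary cross-entropy for one example, scaled by the weight of its true class."""
    p = min(max(p_fraud, eps), 1 - eps)  # avoid log(0)
    loss = -math.log(p) if y == 1 else -math.log(1 - p)
    return weights[y] * loss

labels = [0] * 990 + [1] * 10  # hypothetical: 1% fraud
w = balanced_class_weights(labels)
print({c: round(v, 3) for c, v in w.items()})  # {0: 0.505, 1: 50.0}

# Same confidence, opposite mistakes: a missed fraud vs. a false alarm.
missed_fraud = weighted_log_loss(1, 0.1, w)
false_alarm = weighted_log_loss(0, 0.9, w)
print(round(missed_fraud / false_alarm, 1))  # 99.0
```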

Ensure appropriate evaluation metrics

When working with imbalanced datasets, common metrics like accuracy, or even AUC (Area Under the ROC Curve), may not be suitable on their own. Instead, use metrics that focus on the model's performance on the minority class, such as:

- Precision — How many flagged transactions were actually fraud

- Recall — How many fraud cases the model caught

- F1 score — A balance of precision and recall

- AUPRC (Area Under the Precision-Recall Curve) — Particularly useful for rare event detection

It is often helpful to create a confusion matrix to track false positives (good accounts misclassified as fraud) and false negatives (fraudsters misclassified as good customers).
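A minimal pure-Python sketch of these metrics on made-up scores and labels, flagging scores at or above 0.5. `average_precision` summarizes the precision-recall curve as the mean precision at each rank where a fraud case is retrieved, one common way to estimate AUPRC; it ignores tied scores, which library versions such as scikit-learn's `average_precision_score` handle more carefully.

```python
def confusion(y_true, y_pred):
    """Return (TP, FP, FN, TN) with 1 = fraud."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # good accounts flagged as fraud
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # fraudsters passed as good customers
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

def precision_recall_f1(y_true, y_pred):
    tp, fp, fn, _ = confusion(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def average_precision(y_true, scores):
    """Mean precision at each rank where a fraud case appears (highest scores first)."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    n_pos = sum(y_true)
    return total / n_pos if n_pos else 0.0

y_true = [0, 0, 1, 0, 1, 1, 0, 0, 0, 0]
scores = [0.10, 0.30, 0.80, 0.20, 0.40, 0.90, 0.05, 0.60, 0.15, 0.25]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

print(confusion(y_true, y_pred))  # (2, 1, 1, 6)
print(precision_recall_f1(y_true, y_pred))
print(average_precision(y_true, scores))  # 0.9166666666666666
```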

Leverage ensemble methods

Ensemble techniques combine multiple models to reduce bias and variance, which can boost performance on imbalanced datasets.

Popular strategies include:

- Bagging (e.g., Random Forest)

- Boosting (e.g., XGBoost)

- Stacking (blending multiple model outputs)
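A minimal NumPy sketch of bagging adapted to imbalance (sometimes called balanced bagging): each ensemble member trains on a bootstrap sample of the fraud rows plus an equal number of randomly drawn legitimate rows, and the members' votes are averaged. Decision stumps stand in for the base learner to keep it short, and they only split in the "higher value means riskier" direction; Random Forest or gradient-boosted trees would be far stronger. imbalanced-learn's `BalancedBaggingClassifier` packages a fuller version of this idea. The data is synthetic.

```python
import numpy as np

def fit_stump(X, y):
    """Return the (feature, threshold) whose rule `x >= threshold` maximizes balanced accuracy."""
    best_score, best_j, best_t = -1.0, 0, 0.0
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            flagged = X[:, j] >= t
            tpr = flagged[y == 1].mean()     # share of fraud flagged
            tnr = (~flagged[y == 0]).mean()  # share of legitimate rows passed
            score = (tpr + tnr) / 2
            if score > best_score:
                best_score, best_j, best_t = score, j, t
    return best_j, best_t

def balanced_bagging(X, y, n_models=25, rng=None):
    """Fit one stump per balanced bootstrap sample."""
    rng = np.random.default_rng() if rng is None else rng
    fraud_idx = np.flatnonzero(y == 1)
    legit_idx = np.flatnonzero(y == 0)
    stumps = []
    for _ in range(n_models):
        sample = np.concatenate([
            rng.choice(fraud_idx, size=len(fraud_idx), replace=True),
            rng.choice(legit_idx, size=len(fraud_idx), replace=True),
        ])
        stumps.append(fit_stump(X[sample], y[sample]))
    return stumps

def predict_proba(stumps, X):
    """Fraction of ensemble members that flag each row as fraud."""
    votes = np.array([X[:, j] >= t for j, t in stumps])
    return votes.mean(axis=0)

# Synthetic data: 2,000 legitimate rows, 40 fraud rows; only feature 0 carries signal.
rng = np.random.default_rng(7)
X = np.vstack([rng.normal([0.0, 0.0], 1.0, (2000, 2)),
               rng.normal([2.5, 0.0], 1.0, (40, 2))])
y = np.array([0] * 2000 + [1] * 40)

stumps = balanced_bagging(X, y, n_models=25, rng=rng)
proba = predict_proba(stumps, X)  # in-sample check only; use a held-out split in practice
print(round(proba[y == 1].mean(), 2), round(proba[y == 0].mean(), 2))
```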

Being mindful of class imbalance — and taking steps to address it — is key to building models that detect fraud without overflagging legitimate customers.

How Alloy supports financial fraud detection using machine learning

Alloy's identity and fraud prevention platform offers a comprehensive approach to fraud prevention by integrating with 250+ trusted third-party data sources. Alloy orchestrates internal and external identity, behavioral, and transaction data to help identify fraudulent patterns quickly and proactively.

Here’s how financial institutions and fintechs can use machine learning within Alloy:

1. Alloy-trained ML models

Alloy’s machine learning models for fraud detection apply to both onboarding and ongoing workflows (including account updates, transaction monitoring, and external account linking). We built these ML models to help financial institutions and fintechs not just detect fraud, but take meaningful action to stop it.

For instance, Alloy’s Fraud Signal predicts the likelihood of fraud across an entity’s lifecycle. It offers unique insight into a customer’s risk profile by marrying onboarding signals, monetary data (like transaction velocity), and non-monetary data (such as the number of emails associated with the entity). This allows clients to identify and mitigate fraud at the individual level without adding unnecessary friction.

Fraud Attack Radar, Alloy’s newest model, is purpose-built to identify large-scale fraud attacks at the portfolio level. Rather than flagging individual applicants, it looks for unusual patterns across an entire population of applications — helping clients detect coordinated attacks that might otherwise go unnoticed. When a potential fraud attack is detected, Fraud Attack Radar alerts fraud teams in real time and enables immediate mitigation through fallback “safe mode” policies. These pre-approved workflows introduce additional verification steps to contain the threat while keeping the onboarding funnel open.

Together, these two models provide holistic fraud coverage. Both are built on Alloy’s end-to-end identity and fraud platform, meaning fraud teams can seamlessly connect insights to action without disrupting operations.

2. ML models from third-party vendors

Alloy’s API connects to over 250 data sources, giving clients access to fraud detection models from the most trusted names in identity verification, device risk, behavioral biometrics, and beyond.

By combining Alloy’s own ML-powered models with these third-party solutions, financial institutions and fintechs benefit from a comprehensive strategy where enhanced fraud detection capabilities are tailored to their specific risk prevention needs.

These integrations unlock access to specialized capabilities, including detecting synthetic and stolen identities with precise reason codes, identifying invisible document fraud through advanced AI techniques, and running ongoing litigation checks and site scans to verify business legitimacy.

Together, these tools strengthen fraud prevention by deploying machine learning intelligence across a wider range of risk scenarios, accessible through Alloy’s unified platform.

Every data source available through Alloy is fully integrated, tested, and backed by service-level commitments — not just loosely listed in a marketplace. That means clients can trust that each solution will work reliably and deliver real results.

3. Bring your own ML model

Finally, Alloy allows you to use custom fraud models and input your own decisioning logic. Data is unified and standardized in the Alloy dashboard, making it easy to integrate DIY models into your fraud prevention workflow.

Key takeaways

If you’re building or refining your machine learning fraud strategy, here’s what to remember:

- Machine learning works best with large amounts of data that capture diverse examples of fraud and the contexts in which it appears. The more varied your data, the more capable your model will be of accurately flagging fraudulent behavior.

- Optimized rulesets = better decisions. ML can help strike the right balance between approving legitimate customers and stopping bad actors while reducing manual reviews and false positives.

- Understand your data before you trust your model. Knowing your formats, data flows, and label hierarchies is essential for building effective, transparent models.

- Interpretability matters. Simpler models like logistic regression or gradient-boosted trees can match or outperform deep learning on certain tasks while being far easier to explain, helping stakeholders trust your results.

- Class imbalance is real. Fraud is rare, and that skews performance metrics. Use techniques like resampling, class weighting, and precision-recall metrics to ensure your model actually catches what matters.

- Stay agile. Machine learning isn’t a one-and-done solution. As fraud patterns shift, Alloy makes it easy to test new data inputs, update workflows, and retrain models, so your fraud strategy keeps improving over time.

Discover Alloy’s machine learning models for fraud detection

Let us show you the ropes. Our team can make personalized suggestions to help you optimize your bank, fintech, or credit union's fraud risk policies.